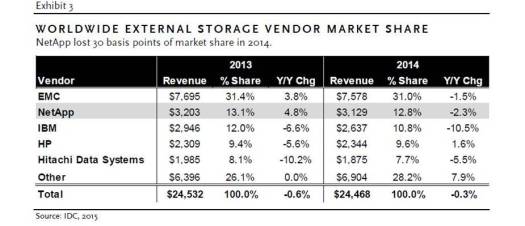

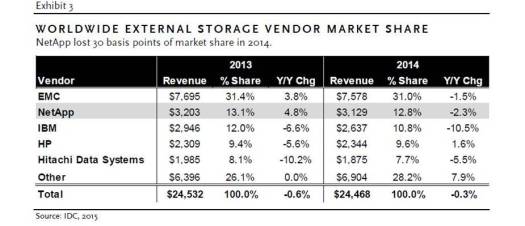

SAN manufacturers are in trouble.

IDC says that array vendor market share is flat despite continued massive growth in storage.

Hyper-convergence (HC) contributes to SAN manufacturer woes. The March 23, 2015 PiperJaffray research report states, “We believe EMC is losing share in the converged infrastructure market to vendors such as Nutanix.”

One of the most compelling advantages of HC is the cost savings. This is particularly evident when evaluated within the context of Moore’s Law.

Moore’s Law – Friend to Hyper-Convergence, Enemy to SAN

Moore’s Law, which states that the number of transistors on a processor doubles roughly every two years (often quoted as every 18 months), has long powered the IT industry. Laptops, the World Wide Web, the iPhone and cloud computing are examples of technologies enabled by ever-faster CPUs.

Moore’s Law in Action (via Imgur)

Innovative CPU manufacturing approaches such as increasing the number of cores, photonics and memristors should continue the Moore’s Law trajectory for a long time to come. The newly released Intel Haswell E5-2600 CPUs, for example, show performance gains of 18% – 30% over the Sandy Bridge predecessor.

Here are the 10 reasons why Moore’s Law is an essential consideration when evaluating hyper-convergence versus traditional 3-tier infrastructure:

1. SANs were built for physical, not virtual infrastructure.

Virtualization is an example of an IT industry innovation made possible by Moore’s Law. But while higher-performing servers, particularly Cisco UCS, helped optimize virtualization capabilities, arrays remained mired in the physical world for which they were designed. Even all-flash arrays are constrained by the transport latency between storage and compute, which does not improve as quickly as CPU performance.

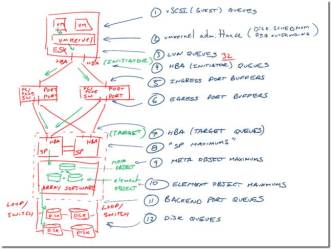

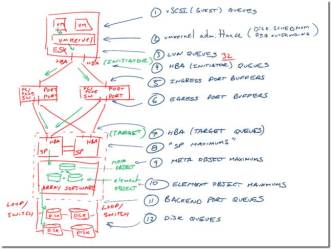

The following image from Chad Sakac’s post, VMware I/O queues, “micro-bursting”, and multipathing, shows the complexity (meaning higher costs) of supporting virtual machines with a SAN architecture.

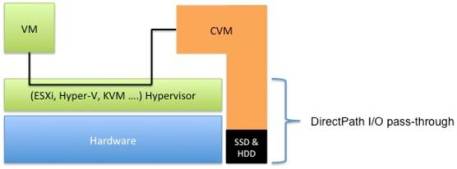

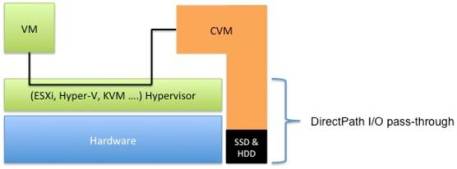

HC: Hyper-convergence was built from the ground up to host a virtualized datacenter (“Hyper” in hyper-convergence refers to “hypervisor”, not to “ultra”). The image below from Andre Leibovici’s post, Nutanix Traffic Routing: Setting the Story Straight, shows the much more elegant and efficient access to data enabled by HC.

2. Customers are stuck with old SAN technology even as server performance quickly improves.

A SAN’s firmware is tightly coupled with the processors; new CPUs can’t simply be plugged in. And proprietary SANs are produced on an assembly line basis in any case – quick retooling is not possible. When a customer purchases a brand new SAN, the storage controllers are probably at least one generation behind.

HC: HC decouples the storage code from the processors. As new nodes are added to the environment, customers benefit from the performance increases of the latest technology in CPU, memory, flash and disk.

Table 1 shows an example of an organization projecting a 20% increase in server workloads per year. The table also reflects a 20% density increase of VMs per Nutanix node – conservative by historical trends.

Fourteen nodes are required to support 700 VMs in Year 1, but only 8 more nodes support the 1,452 workloads in Year 5. And the total rack unit space required increases only 50% – from 8U to 12U.

Table 1: Example of decreasing number of nodes required to host increasing VMs

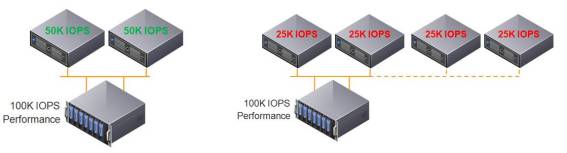
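The arithmetic behind a projection like Table 1 can be sketched in a few lines. This is a minimal model, not the spreadsheet behind the table: it assumes 50 VMs per node in Year 1 (700 VMs on 14 nodes), that only newly purchased nodes get the 20% density gain, and that nodes are bought whole. The function and parameter names are hypothetical, and because the rounding rules behind Table 1 are not stated, the yearly node counts may differ from the table.

```python
import math

def project_nodes(initial_vms=700, base_density=50.0, growth=0.20,
                  density_gain=0.20, years=5):
    """Return one row per year: VMs to host, nodes bought, running node total."""
    rows, capacity, total_nodes = [], 0.0, 0
    for year in range(1, years + 1):
        # Workloads grow 20% per year from the Year 1 baseline.
        vms = initial_vms * (1 + growth) ** (year - 1)
        # Assumption: nodes bought in a given year are 20% denser than last year's.
        density = base_density * (1 + density_gain) ** (year - 1)
        # Buy only enough whole nodes to cover what existing nodes cannot host.
        shortfall = max(0.0, vms - capacity)
        new_nodes = math.ceil(round(shortfall / density, 9))
        capacity += new_nodes * density
        total_nodes += new_nodes
        rows.append({"year": year, "vms": round(vms),
                     "new_nodes": new_nodes, "total_nodes": total_nodes})
    return rows

rows = project_nodes()
for row in rows:
    print(row)
```

The point of the model is the shape of the curve: each year's purchase is sized against that year's density, so the node count grows far more slowly than the VM count.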

3. A SAN performs best on the day it is installed. After that it’s downhill.

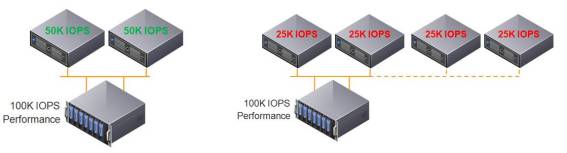

Josh Odgers wrote about how a SAN’s performance degrades as the environment scales. Adding more servers to the environment, or even more storage shelves to the SAN, reduces the IOPS available per virtualization host. Table 2 (from Odgers’ post) shows how IOPS per server decrease as additional servers are added to the environment.

Table 2: IOPS per Server Decline when Connected to a SAN

HC: As nodes are added, storage controllers (which are virtual), read cache and read/write cache (flash storage) all scale either linearly or better (because of Moore’s Law enhancements).
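A rough way to see the difference is to treat the SAN controllers as a fixed IOPS budget that every host shares, while each HC node brings its own controller. The sketch below uses made-up figures (100,000 IOPS for the controller pair, 25,000 IOPS per node) purely for illustration; they are not taken from Odgers’ post or from any vendor specification, and the function names are hypothetical.

```python
def san_iops_per_host(controller_iops, hosts):
    """A fixed controller budget is divided among all attached hosts."""
    return controller_iops / hosts

def hc_total_iops(iops_per_node, nodes):
    """Each node adds its own virtual controller, so the aggregate grows with node count."""
    return iops_per_node * nodes

# Hypothetical figures for illustration only.
for hosts in (4, 8, 16):
    san = san_iops_per_host(100_000, hosts)
    hc = hc_total_iops(25_000, hosts) / hosts
    print(f"{hosts:>2} hosts: SAN {san:>8.0f} IOPS/host, HC {hc:>8.0f} IOPS/host")
```

In this simplified model the SAN figure halves every time the host count doubles, while the HC figure stays flat. It ignores newer, faster nodes, which would push the HC line upward over time.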

4. Customers must over-purchase SAN capacity.

When SAN customers fill up an array or reach the limit on controller performance, they must upgrade to a larger model to facilitate additional expansion. Besides the cost of the new SAN, the upgrade itself is no easy feat. Wikibon estimates that the migration cost to a new array is 54% of the original array cost.

To try to avoid this expense and complexity, customers buy extra capacity/headroom up-front that may not be utilized for two to five years. This high initial investment cost hurts the project ROI. Moore’s Law then ensures the SAN technology becomes increasingly archaic (and therefore less cost-effective) by the time it’s utilized.

Even buying lots of extra headroom up-front is no guarantee of avoiding a forklift upgrade. Faster growth than anticipated, new applications, new use cases, purchase of another company, etc. all can, and all too frequently do, lead to under-purchasing SAN capacity. A Gartner study, for example, showed that 90% of the time organizations under-buy storage for VDI deployments.

HC: HC nodes are consumed on a fractional basis – one node at a time. As customers expand their environments, they incorporate the latest in technology. Fractional consumption makes under-buying impossible. On the contrary, it is economically advantageous for customers to start out with only what they need up-front, because Moore’s Law quickly ensures higher VM-per-node density for future purchases.

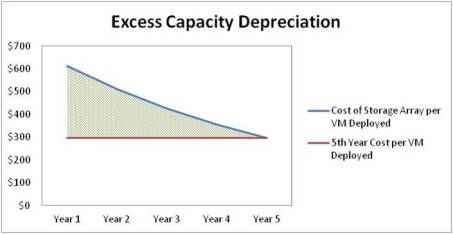

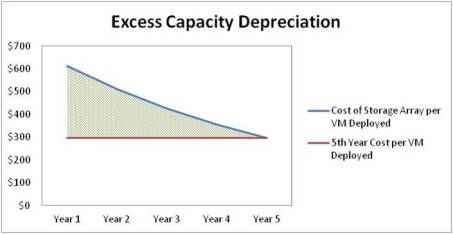

5. A SAN incurs excess depreciation expense

The extra array capacity a customer purchases up-front starts depreciating on day one. By the time the capacity is fully utilized down the road, the customer has absorbed a lot of depreciation expense along with the extra rack space, power and cooling costs.

Table 3 shows an example of excess array/controller capacity purchased up front that depreciates over the next several years.

Table 3: Excess Capacity Depreciation

HC: Fractional consumption eliminates the requirement to buy extra capacity up-front, minimizing depreciation expense.
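The depreciation argument can be made concrete with a small calculation. The sketch below assumes straight-line depreciation and a hypothetical $500,000 array whose utilization climbs from 40% to 100% over five years; none of these figures come from Table 3, and the function name is illustrative.

```python
def idle_depreciation(purchase_cost, utilization_by_year):
    """Straight-line depreciation attributable to unused capacity, per year."""
    annual = purchase_cost / len(utilization_by_year)
    # The unused fraction of each year's depreciation buys nothing.
    return [annual * (1 - used) for used in utilization_by_year]

# Hypothetical array: $500K, utilization ramping up over five years.
idle = idle_depreciation(500_000, [0.40, 0.55, 0.70, 0.85, 1.00])
for year, wasted in enumerate(idle, start=1):
    print(f"Year {year}: ${wasted:,.0f} depreciated on idle capacity")
print(f"Total: ${sum(idle):,.0f}")
```

Under these assumptions, a sizeable share of the purchase price is written off before the capacity it paid for is ever used, and that is before counting the rack space, power and cooling for the idle shelves.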

6. SAN “lock-in” accelerates its decline in value

The proprietary nature of a SAN further accelerates its depreciation. A Nutanix customer, a mortgage company, had purchased a Vblock 320 (list price $885K) one year before deciding to migrate to Nutanix. A leading refurbished specialist was only willing to give them $27,000, roughly 3% of list price, for their one-year-old Vblock.

While perhaps not a common problem, in some cases modest array upgrades are difficult or impossible because of an inability to get the required components.

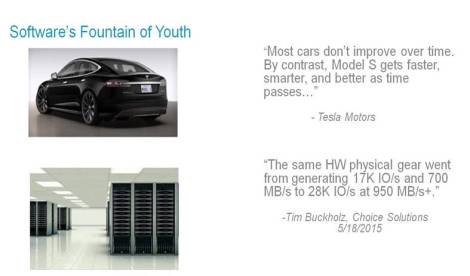

HC: An HC solution utilizing commodity hardware also depreciates quickly due to Moore’s Law, but there are a few mitigating factors:

- In a truly software-defined HC solution, enhancements in the OS can be applied to the older nodes. This increases performance while enabling the same capabilities and features as newer nodes.

- Since an organization typically purchases nodes over time, the older nodes can easily be redeployed for other use cases.

- If an organization wanted to abandon HC, it could simply vMotion/live migrate VMs off of the nodes, erase them and then re-purpose the hardware as basic servers with SSD/HDDs ready to go.

7. SANs Require a Staircase Purchase Model

A SAN is typically upgraded by adding new storage shelves until the controllers, or the array or expansion cabinets, reach capacity. A new SAN is then required. This is an inefficient way to spend IT dollars.

It is also anathema to private cloud. As resources reach capacity, IT has no option but to ask the next service requestor to bear the burden of required expansion. Pity the business unit with a VM request just barely exceeding existing capacity. IT may ask it to fund a whole new blade chassis, SAN or Nexus 7000 switch.

Table 4 shows an example, based upon a Nutanix customer, of a comparison in purchasing costs of a SAN vs. HC – assuming a SAN refresh takes place in year 4.

Table 4: Staircase Purchase of a SAN vs. Fractional Consumption of HC

HC: The unit of purchase is simply a node which, in the case of an HC solution such as Nutanix, is self-discovered once attached to the network and then automatically added to the cluster. Fractional consumption makes it much less expensive to expand private cloud as needed. It also makes it easier to implement meaningful charge-back policies.
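The staircase effect is easiest to see as cumulative spend. The yearly figures below are invented for illustration (a large initial SAN purchase, small shelf additions, then a refresh in year 4, versus steady node purchases); they are not the customer numbers behind Table 4.

```python
from itertools import accumulate

def cumulative(spend_by_year):
    """Running total of purchases, year by year."""
    return list(accumulate(spend_by_year))

# Hypothetical yearly purchases in dollars.
san_spend = [600_000, 50_000, 50_000, 600_000, 50_000]   # refresh lands in year 4
hc_spend = [250_000, 100_000, 100_000, 100_000, 100_000]  # nodes added as needed

for year, (san, hc) in enumerate(zip(cumulative(san_spend), cumulative(hc_spend)), start=1):
    print(f"Year {year}: SAN ${san:>9,}  HC ${hc:>9,}")
```

The SAN line jumps in steps and front-loads the cash; the HC line tracks demand. The same per-year breakdown is what makes charge-back straightforward, since each node purchase can be attributed to the request that triggered it.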

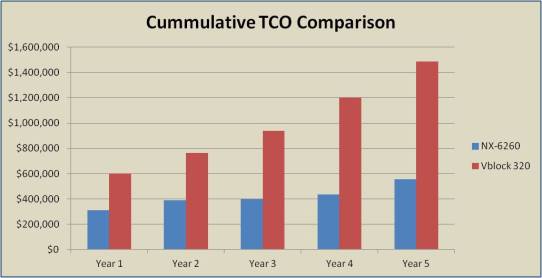

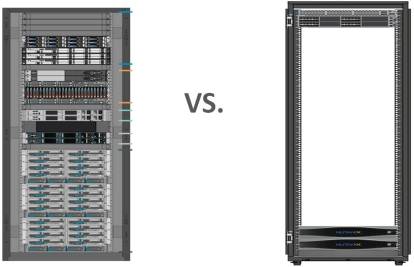

8. SANs have a Much Higher Total Cost of Ownership

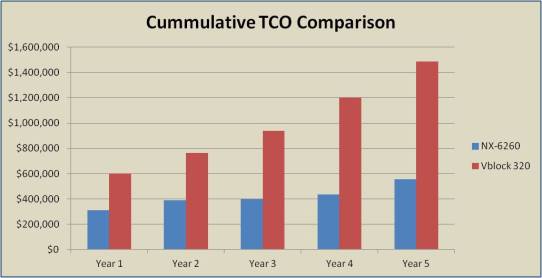

When evaluating the likely technology winner, bet on the economics. This means full total cost of ownership (TCO), not just product acquisition.

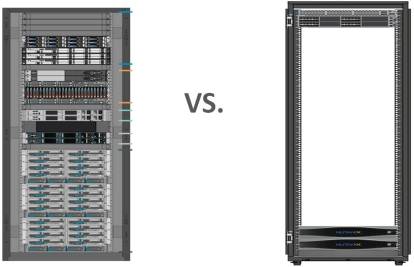

SANs lock customers into old technology for several years. This has implications beyond just slower performance and fewer capabilities; it means ongoing higher operating costs for rack space, power, cooling and administration. Table 5 shows a schematic from the mortgage company mentioned above that replaced a Vblock 320 with two Nutanix NX-6260 nodes.

Table 5: Vblock 320 vs. Nutanix NX-6260 – Rack Space

Rack space, power and cooling costs are easy to calculate based upon model specifications. They, along with costs of associated products such as switching fabrics, should be projected for each solution over the next several years.

Administrative costs also need to be considered, but they are typically more difficult to gauge. They can also vary widely depending upon the type of compute and storage infrastructure utilized.

Some of the newer arrays, such as Pure Storage, do an excellent job at simplifying administration, but even Pure still requires storage tasks related to LUNs, zoning, masking, FC, multipathing, etc. And this doesn’t include all the work administering the server side. Here’s my recent post comparing upgrading firmware between Nutanix and Cisco UCS.

Table 6 shows the 5-year TCO chart for the mortgage customer including a conservative estimate of reduced administrative cost.

Table 6: TCO of Vblock 320 vs. Nutanix NX-6260

HC: In addition to slashed costs for rack space, power and cooling, HC is managed entirely by the virtualization team – no need for specialized storage administration tasks.
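A TCO comparison of this kind comes down to adding acquisition cost to multi-year operating costs. The sketch below is one simple way to structure it. Every rate and every solution figure in it is a placeholder assumption (cost per rack unit, electricity price, cooling modeled as a multiple of power cost, hourly admin rate), not data from Table 6 or from any product specification.

```python
from dataclasses import dataclass

@dataclass
class Solution:
    name: str
    acquisition: float           # purchase price in dollars
    rack_units: int              # rack space consumed
    power_kw: float              # average draw in kilowatts
    admin_hours_per_year: float  # estimated administration effort

def tco(s, years=5, cost_per_ru_year=300.0, cost_per_kwh=0.10,
        cooling_overhead=1.0, admin_rate=75.0):
    """Acquisition plus rack space, power, cooling and admin over `years`."""
    rack = s.rack_units * cost_per_ru_year * years
    power = s.power_kw * 24 * 365 * cost_per_kwh * years
    cooling = power * cooling_overhead  # assumption: cooling scales with power cost
    admin = s.admin_hours_per_year * admin_rate * years
    return s.acquisition + rack + power + cooling + admin

# Placeholder figures for illustration only.
three_tier = Solution("3-tier", 800_000, 42, 12.0, 500)
hyperconverged = Solution("HC", 400_000, 4, 2.0, 100)
for s in (three_tier, hyperconverged):
    print(f"{s.name}: 5-year TCO ${tco(s):,.0f}")
```

Keeping the rates as parameters makes it easy to rerun the comparison with a datacenter's actual rack, power and labor costs, which is where the result is most sensitive.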

9. SANs have a higher risk of downtime / lost productivity

RAID is, by today’s standards, an ancient technology. Invented in 1987, RAID still leaves a SAN vulnerable to failure. In some configurations, such as RAID 5, two lost drives can mean downtime or even data loss.

Both disks and RAID sets are getting larger. Disk failures therefore require longer rebuilds, which increases both the performance impact and the risk that another failure takes out the set during the rebuild.

And regardless of RAID type, a failed storage controller cuts SAN performance in half (assuming two controllers). Lose two controllers, and it’s game over.

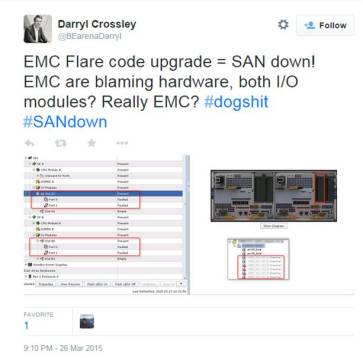

Sometimes unexpected events such as a water main breaking on the floor directly above the SAN can create failure. And firmware upgrades, in addition to being a laborious process, carry additional risk of downtime. Then there’s human error. Array complexity makes this a realistic concern.

As demands on the array increase over time, the older SAN technology becomes still more vulnerable to disruption or outright failure. Even temporary downtime can be very expensive.

HC: Rather than RAID striping, an HC solution such as Nutanix includes replication of virtual machines onto two or three nodes. A lost drive or even entire node has minimal impact as the remaining nodes rebuild the failed unit non-disruptively in the background. And the more nodes that are added to the environment, the faster the failed node is restored in the background.
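A back-of-the-envelope model shows why rebuild time behaves so differently in the two designs. It assumes a RAID rebuild is limited by the write rate of a single replacement drive, while re-replication in a cluster is spread evenly across the surviving nodes. The throughput figures are hypothetical and the model ignores production load, network limits and throttling.

```python
def raid_rebuild_hours(disk_tb, rebuild_mb_per_s):
    """Whole disk rewritten through one replacement drive."""
    return disk_tb * 1_000_000 / rebuild_mb_per_s / 3600

def distributed_rebuild_hours(data_tb, surviving_nodes, per_node_mb_per_s):
    """Re-replication work is shared by every surviving node."""
    return data_tb * 1_000_000 / (surviving_nodes * per_node_mb_per_s) / 3600

# Hypothetical: 3.6 TB to restore at 100 MB/s per drive or per node.
print(f"RAID rebuild: {raid_rebuild_hours(3.6, 100):.1f} h")
for nodes in (3, 7, 15):
    hours = distributed_rebuild_hours(3.6, nodes, 100)
    print(f"{nodes:>2} surviving nodes: {hours:.1f} h")
```

In this model the RAID rebuild time grows in step with disk size, while the distributed rebuild time shrinks as the cluster grows, which shortens the window in which a second failure can do damage.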

10. Downsizing Penalty

Growth is not the only source of SAN inefficiency; downsizing can be a problem as well. Downsizing can result from decreased business, but also from a desire to move workloads to the cloud. The high upfront cost and fixed operating expenses of a SAN are difficult to justify for a reduced workload.

HC: Customers can sell off or redeploy their older, slower nodes. This minimizes rack space, power and cooling expenses by only running the newest, highest-performance nodes. The software-defined nature of HC makes it easy to add new capabilities such as Nutanix’s “Cloud Connect” which enables automatic backup to public cloud providers.

The Inevitable Transition from SANs to HC

SANs were designed for the physical world, not for virtualized datacenters. The reason they proliferate today is that when VMware launched vMotion in 2003, it mandated, “The hosts must share a storage area network”.

But Moore’s Law marches relentlessly on. Hyper-convergence takes advantage of faster CPU, memory, disk and flash to provide a significantly superior infrastructure for hosting virtual machines. It will inevitably replace the SAN as the standard of the modern datacenter.

Thanks to Josh Odgers (@josh_odgers), Scott Drummonds (@drummonds), Cameron Stockwell (@ccstockwell), James Pung (@james_nutanix), Steve Dowling and George Fiffick for ideas and edits.